Co-founder of ‘Black in AI’, Timnit Gebru spoke at the AI for Good Global Summit about the methods and challenges associated with transparency in AI.

The AI for Good Global Summit is the leading United Nations platform for global and inclusive dialogue on AI. The Summit is hosted each year in Geneva by the ITU in partnership with UN sister agencies, XPRIZE Foundation and ACM. This year (4-8 May 2019), Innovation News Network attended the summit to find out what is currently happening within the AI industry.

Timnit Gebru, computer scientist and technical co-lead of the Ethical Artificial Intelligence Team at Google, is also co-founder of ‘Black in AI’ (BAI). BAI focuses on ideas, collaborations and initiatives that increase the presence of Black people in the field of Artificial Intelligence. At this year’s summit, Gebru spoke about the methods and challenges associated with transparency in AI.

Gebru started her speech by addressing the concept behind her PhD thesis: “The work was on using Google Street View images to predict demographic characteristics. We detected and classified pictures of cars, 15m images in 200 of the most populous cities in the US, and we used this information to do a proxy census. We wanted to figure out aspects such as education, income and income segregation. We got super excited by what we could predict using Google images, and one of those things was crime rates; you can see actual crime rates versus crimes that were predicted by our algorithm.”

Interestingly, comparing the two revealed a clear difference between the actual and the predicted crime rates. For example, actual crime in Oakland was spread out across the city. The algorithm predicted the opposite: crime concentrated in a few areas, populated mostly by Puerto Rican, Black and Brown people.

Trusting AI tools to change people’s lives

Gebru went on to mention an initiative proposed by Immigration and Customs Enforcement in the US, which aimed to partner with tech companies to use social network data and determine whether someone would make a good citizen or not. Gebru said: “The first question is, should we do this kind of thing in the first place? The second question is, are the AI tools that we have robust enough to do this kind of high-stakes analysis in terms of determining people’s lives? The answer is no. For example, Google Translate translated a ‘good morning’ post as ‘attack them’. As a result, this person who said good morning in Arabic got arrested, and she was later let go. We cannot trust our tools to do the kind of predictive analysis that will change people’s lives.

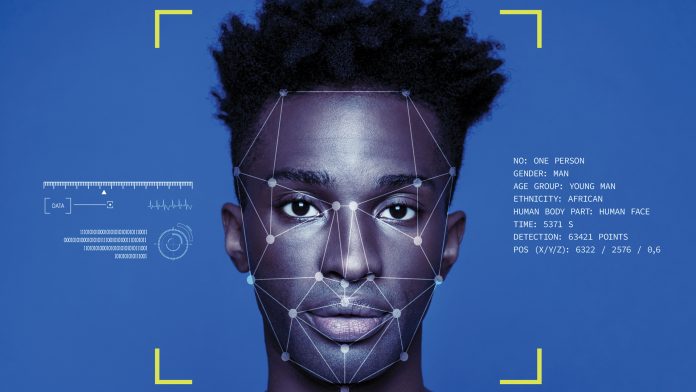

“I work a lot on analysing how these systems are used in the real world. We analyse commercial gender classification systems; what these systems do is that they have a picture of a face, and they say whether this face is that of a male or a female – it’s a binary classification. Many people have actually written about how these gender classifications should not exist in the first place. When we were analysing these systems, we found that as you go darker and darker in skin type, it’s about 50% accurate. As you go lighter and lighter in skin type you get closer to perfect performance. The reason for this is data. When we were doing this kind of analysis, we wanted to use data sets that were already used for commercial face recognition systems, but those data sets were overwhelmingly lighter skinned. As a result, we had to create our own data set that was more balanced in skin type and gender.”
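
The kind of analysis Gebru describes, reporting accuracy separately for each skin-type group rather than as a single overall figure, can be sketched roughly as follows. This is a minimal illustration only: the function name `accuracy_by_group`, the group labels and the toy records are all hypothetical and are not data or code from the study.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy records (assumption: invented for illustration, NOT study data).
records = [
    ("darker", "female", "male"),
    ("darker", "female", "female"),
    ("darker", "male", "male"),
    ("darker", "female", "male"),
    ("lighter", "female", "female"),
    ("lighter", "male", "male"),
    ("lighter", "male", "male"),
    ("lighter", "female", "female"),
]

# A single overall figure hides the gap that the per-group breakdown exposes.
overall = sum(truth == predicted for _, truth, predicted in records) / len(records)
per_group = accuracy_by_group(records)

print(f"overall accuracy: {overall:.2f}")
for group, accuracy in per_group.items():
    print(f"{group}: {accuracy:.2f}")
```

On this toy input the overall accuracy looks respectable, while the breakdown shows one group performing far worse than the other, which is the pattern a single aggregate number would conceal.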

Gebru went on to explain that their focus was on skin type instead of race because “race is a social construct that is unstable across time and space. I think this is why it’s very important to bring researchers from different backgrounds to the table. My collaborator and I are both Black women in the US, and we understand the effects of colourism, for example. That’s why we wanted to work with skin type.

“We used a skin type classification system that is used by dermatologists for our analysis. We found some huge disparities across skin type and gender. These papers brought up a whole bunch of things: there were people calling for regulation of identification analysis tools, a whole bunch of companies came out with different tools, etc.

We can’t ignore social structure problems

“The first lesson for me is that, as researchers, we can’t just be soldiers; we can’t ignore social structure problems. The people that are unfairly targeted by technology are usually people who are vulnerable.” Gebru went on to show a photograph from a machine learning conference in which only men appeared. Gebru said: “We can’t have this: a group of researchers who don’t represent the world, working on the technology that is being used by everybody, on everybody.

“This is super important. On the bias that I just talked about, there was follow-up work that showed that a similar bias exists in Amazon’s recognition system, which is also being sold to law enforcement, and the lead author of that paper told me that she was going to drop out of this whole field until she found Black in AI.

Black in AI – regulation is starting to happen

“As a result, I co-founded ‘BAI’ with my colleague, as we find that it’s really important to address these kinds of structural issues. There are now more restrictions on who can use AI – recently San Francisco announced that they banned the usage of facial analysis tools by certain governmental agencies. Regulation is starting to happen; however, we don’t really have anything comprehensive right now.

“When we’re talking about bias, it enters in many different ways. First is just what we believe, what problems to work on, what research questions to ask; that is already a bias. Then it enters when we collect training and validation data, when we architect our models, and when we analyse how our models are used. These gender classification systems that I talked about: what does it mean for them to work? For people in the research community, these shouldn’t exist in the first place.”

Timnit Gebru

Co-founder

Black in AI

tgebru@gmail.com

https://blackinai.github.io/