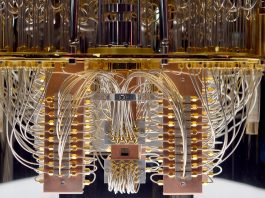

University of Southern California researchers are using techniques that curb error accumulation to demonstrate the potential of quantum computing in the error-prone NISQ era.

Quantum computers have the potential to solve problems with an advantage that increases as the complexity of a problem grows.

However, they are also prone to errors and noise.

The challenge is “to obtain an advantage in the real world where today’s quantum computers are still ‘noisy,’” explained Daniel Lidar, the Viterbi Professor of Engineering at USC and Director of the USC Center for Quantum Information Science & Technology.

The current noise-prone period of quantum computing is called the Noisy Intermediate-Scale Quantum (NISQ) era, a term adapted from the RISC architecture used to describe classical computing devices. Because today's machines are so noisy, any present-day demonstration of a quantum speed advantage requires noise reduction.

Evaluating a quantum computer’s performance in the NISQ era

The more unknown variables a problem has, the harder it usually is for a computer to solve.

One way researchers evaluate a computer’s performance is to play a type of game with it, measuring how quickly an algorithm can guess hidden information.

It can be compared to a version of the TV game Jeopardy, where contestants take turns guessing a secret word of known length, one whole word at a time. For each guessed word, the host reveals only one correct letter before changing the secret word randomly.

The researchers replaced words with bitstrings

In the new study, Lidar and Dr Bibek Pokharel, a Research Scientist at IBM Quantum, demonstrated a quantum speedup in this bitstring-guessing game. By effectively suppressing the errors that typically arise at this scale, they handled strings up to 26 bits long, significantly longer than previously possible.

On average, a classical computer would require around 33 million guesses to correctly identify a 26-bit string.
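The article does not spell out where the figure of roughly 33 million comes from. One model that reproduces it, offered here as an assumption rather than taken from the study, is that in each round the classical player learns one bit of the current secret and must guess the other n - 1 bits at random. Each round then succeeds with probability 2^-(n-1), so the number of rounds is geometrically distributed with mean 2^(n-1), which is 33,554,432 for n = 26. The helper names below are hypothetical.

```python
import random


def expected_classical_guesses(n_bits: int) -> int:
    """Mean rounds until success when each round succeeds with p = 2**-(n_bits - 1).

    Assumed model (not from the study): the player learns one bit of the current
    secret and guesses the other n_bits - 1 bits at random, so the round count is
    geometric with mean 1/p.
    """
    return 2 ** (n_bits - 1)


def simulate_rounds(n_bits: int, rng: random.Random) -> int:
    """Play the assumed classical game once and return how many rounds it took."""
    rounds = 0
    while True:
        rounds += 1
        secret = rng.getrandbits(n_bits)                    # the secret changes every round
        revealed = 1 << rng.randrange(n_bits)               # mask of the one bit the host reveals
        guess = rng.getrandbits(n_bits)                     # random guess for every bit...
        guess = (guess & ~revealed) | (secret & revealed)   # ...except the revealed one
        if guess == secret:
            return rounds


print(expected_classical_guesses(26))  # prints 33554432

# Monte Carlo sanity check on a short string, where the expected value is 2**5 = 32.
rng = random.Random(0)
trials = [simulate_rounds(6, rng) for _ in range(5000)]
print(sum(trials) / len(trials))
```

The simulation is only practical for short strings; for 26 bits the closed-form mean is the useful number.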

A perfectly functioning quantum computer, in contrast, could identify the correct answer in just one guess, because it can make that single query in quantum superposition over all possible bitstrings. This efficiency comes from running a quantum algorithm developed more than 25 years ago by computer scientists Ethan Bernstein and Umesh Vazirani.
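To make the one-guess claim concrete, here is a minimal noiseless state-vector simulation of the textbook Bernstein–Vazirani algorithm, written in plain Python. It is a sketch, not the researchers' hardware implementation or their exact variant of the game: it assumes a perfect phase oracle that multiplies each basis state |x> by (-1)^(s·x), and the function names are hypothetical. Applying Hadamards, one oracle call, and Hadamards again leaves the register exactly in |s>. Note that simulating this classically still costs 2^n memory; only the quantum device avoids that.

```python
def hadamard_all(state: list[float]) -> list[float]:
    """Apply a Hadamard gate to every qubit via the fast Walsh-Hadamard transform."""
    out = list(state)
    dim = len(out)
    step = 1
    while step < dim:
        for start in range(0, dim, 2 * step):
            for j in range(start, start + step):
                a, b = out[j], out[j + step]
                out[j], out[j + step] = a + b, a - b
        step *= 2
    norm = dim ** 0.5
    return [amp / norm for amp in out]


def parity(x: int) -> int:
    """Number of 1 bits in x, modulo 2."""
    return bin(x).count("1") % 2


def bernstein_vazirani(secret: int, n_bits: int) -> tuple[int, float]:
    """Return the most likely measurement outcome and its probability."""
    dim = 2 ** n_bits
    state = [0.0] * dim
    state[0] = 1.0                      # start in |00...0>
    state = hadamard_all(state)         # equal superposition of every bitstring x
    # The single oracle query: flip the sign of |x> whenever secret . x is odd.
    state = [-amp if parity(secret & x) else amp for x, amp in enumerate(state)]
    state = hadamard_all(state)         # interference concentrates all amplitude on |secret>
    probs = [amp * amp for amp in state]  # amplitudes are real, so |amp|^2 = amp^2
    best = max(range(dim), key=probs.__getitem__)
    return best, probs[best]


outcome, prob = bernstein_vazirani(0b1011, 4)
print(outcome, round(prob, 6))  # prints 11 1.0
```

On real NISQ hardware the final probability falls below 1 because of noise, which is exactly the problem the next sections address.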

However, noise present in the NISQ era can greatly hinder this exponential quantum advantage.

Achieving quantum speedup through dynamical decoupling

The team achieved their quantum speedup by adapting a noise suppression technique called dynamical decoupling. Lidar and Pokharel spent a year experimenting with it, while Pokharel was a doctoral candidate under Lidar at USC.
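The article does not describe the pulse sequences the team used. As a rough illustration of the general idea only, the toy model below shows the simplest form of dynamical decoupling (an XX, or spin-echo style, sequence) on a single simulated qubit. It assumes an unknown but constant frequency error delta, ideal instantaneous pi pulses, and no other noise; all function names are hypothetical. Left idle, a qubit prepared in |+> dephases; splitting the idle time with two X pulses makes the accumulated phase cancel.

```python
import cmath
import math


def free_evolution(state, delta, t):
    """Idle for time t under quasi-static dephasing H = (delta / 2) Z, with delta unknown."""
    a0, a1 = state
    return [a0 * cmath.exp(-0.5j * delta * t), a1 * cmath.exp(0.5j * delta * t)]


def x_pulse(state):
    """Ideal, instantaneous pi pulse about X: swaps the |0> and |1> amplitudes."""
    a0, a1 = state
    return [a1, a0]


def fidelity_with_plus(state):
    """|<+|psi>|^2: how much of the initial |+> superposition survives."""
    overlap = (state[0] + state[1]) / math.sqrt(2)
    return abs(overlap) ** 2


PLUS = [1 / math.sqrt(2), 1 / math.sqrt(2)]


def idle(delta, t):
    """No decoupling: the phase delta * t accumulates unchecked."""
    return fidelity_with_plus(free_evolution(PLUS, delta, t))


def with_xx_decoupling(delta, t):
    """Wait t/2, X pulse, wait t/2, X pulse: the second half undoes the first half's phase."""
    state = free_evolution(PLUS, delta, t / 2)
    state = x_pulse(state)
    state = free_evolution(state, delta, t / 2)
    state = x_pulse(state)
    return fidelity_with_plus(state)


for delta in (0.0, 0.5, 1.0, 3.0):
    print(delta, round(idle(delta, 2.0), 4), round(with_xx_decoupling(delta, 2.0), 4))
```

Real hardware noise fluctuates in time and real pulses are imperfect, so practical sequences are more elaborate; this toy model should not be read as an explanation of the team's results.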

At first, applying dynamical decoupling actually degraded performance.

After numerous refinements, however, the quantum algorithm functioned as intended.

The quantum advantage then became increasingly evident as problems grew more complex, with the time to solution growing more slowly than on any classical computer.

Lidar noted: “Currently, classical computers can still solve the problem faster in absolute terms.” The reported advantage concerns how the time to find the solution scales with problem size, not the absolute time. Therefore, for sufficiently long bitstrings, the quantum solution will eventually be quicker.
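The distinction between scaling and absolute time can be illustrated with made-up numbers. In the sketch below, every constant is hypothetical and none comes from the study: the classical cost assumes 2^(n-1) guesses at 1 ns each, while the quantum cost is given a far larger 1 ms prefactor but a slower-growing 2^(0.5n). The classical machine wins at 26 bits, yet the slower-growing curve inevitably overtakes it at some longer string length.

```python
def classical_seconds(n_bits: int) -> float:
    # Hypothetical: 2**(n_bits - 1) guesses at an assumed 1 ns per guess.
    return 1e-9 * 2 ** (n_bits - 1)


def quantum_seconds(n_bits: int) -> float:
    # Hypothetical: a much larger 1 ms prefactor but slower growth, 2**(0.5 * n_bits).
    return 1e-3 * 2 ** (0.5 * n_bits)


def crossover(max_bits: int = 200):
    """Smallest string length at which the hypothetical quantum time beats the classical time."""
    for n in range(1, max_bits + 1):
        if quantum_seconds(n) < classical_seconds(n):
            return n
    return None


print(classical_seconds(26) < quantum_seconds(26))  # prints True
print(crossover())  # prints 42
```

Changing the assumed constants moves the crossover point, but as long as the quantum exponent is smaller, a crossover always exists.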

The study showed that, with proper error control, quantum computers can execute complete algorithms whose time to solution scales better than that of classical computers, even in the NISQ era.