The EU project VEDLIoT shows how Deep Learning and Artificial Intelligence can help unlock the potential of IoT systems.

The Internet of Things (IoT), a network of interconnected devices equipped with sensors and software, has revolutionised how we interact with the world around us, empowering us to collect and analyse data like never before.

As technology advances and becomes more accessible, more objects are equipped with connectivity and sensing capabilities, making them part of the IoT ecosystem. The number of active IoT devices is expected to reach 29.7 billion by 2027, a significant surge from the 3.6 billion devices recorded in 2015. This rapid growth creates strong demand for solutions that mitigate the safety and computational challenges of IoT applications. Industrial IoT, automotive, and smart homes in particular are three main areas with specific requirements, but they share a common need for efficient IoT systems to enable optimal functionality and performance.

Artificial Intelligence (AI) can increase the efficiency of IoT systems and unlock their potential; combining the two yields AIoT architectures. Using sophisticated algorithms and Machine Learning techniques, AI enables IoT systems to make intelligent decisions, process vast amounts of data, and extract valuable insights. For instance, this integration drives operational optimisation in industrial IoT, facilitates advanced autonomous vehicles, and offers intelligent energy management and personalised experiences in smart homes.

Among the different AI algorithms, Deep Learning, which uses artificial neural networks, is particularly well suited to IoT systems for several reasons. One of the primary reasons is its ability to learn and extract features automatically from raw sensor data. This is particularly valuable in IoT applications, where the data can be unstructured, noisy, or have complex relationships. Additionally, Deep Learning enables IoT applications to handle real-time and streaming data efficiently. This allows for continuous analysis and decision-making, which is crucial in time-sensitive applications such as real-time monitoring, predictive maintenance, or autonomous control systems.
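A common way to analyse streaming sensor data continuously is to slide a fixed-size window over the incoming samples and run the model on each window. The sketch below illustrates that pattern under stated assumptions: `sliding_windows`, `toy_model` and `monitor` are hypothetical names, the window size and hop are arbitrary, and `toy_model` is a simple RMS threshold standing in for a trained neural network, not anything from VEDLIoT.

```python
import math
from collections import deque
from typing import Callable, Deque, Iterable, Iterator, List


def sliding_windows(stream: Iterable[float], size: int, hop: int) -> Iterator[List[float]]:
    """Yield overlapping windows of `size` samples, emitting a new one every `hop` samples."""
    buf: Deque[float] = deque(maxlen=size)  # oldest samples drop out automatically
    since_last = 0
    for sample in stream:
        buf.append(sample)
        since_last += 1
        # Emit only once the buffer is full and enough new samples have arrived.
        if len(buf) == size and since_last >= hop:
            since_last = 0
            yield list(buf)


def toy_model(window: List[float]) -> str:
    """Hypothetical stand-in for a trained network: flags windows with high RMS energy."""
    rms = math.sqrt(sum(x * x for x in window) / len(window))
    return "anomaly" if rms > 1.0 else "normal"


def monitor(stream: Iterable[float], model: Callable[[List[float]], str],
            size: int = 4, hop: int = 2) -> List[str]:
    """Run the model on each window as the stream arrives and collect its decisions."""
    return [model(window) for window in sliding_windows(stream, size, hop)]


# Six quiet vibration samples followed by a burst of strong vibration.
readings = [0.1] * 6 + [3.0] * 4
print(monitor(readings, toy_model))  # ['normal', 'normal', 'anomaly', 'anomaly']
```

Because the buffer has a fixed maximum length, memory use stays constant however long the stream runs, which matters on constrained IoT hardware.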

Despite the numerous advantages of Deep Learning for IoT systems, its implementation has inherent challenges, such as efficiency and safety, that must be addressed to fully leverage its potential. The Very Efficient Deep Learning in IoT (VEDLIoT) project aims to solve these challenges.

VEDLIoT: Enhancing IoT systems with efficient Deep Learning

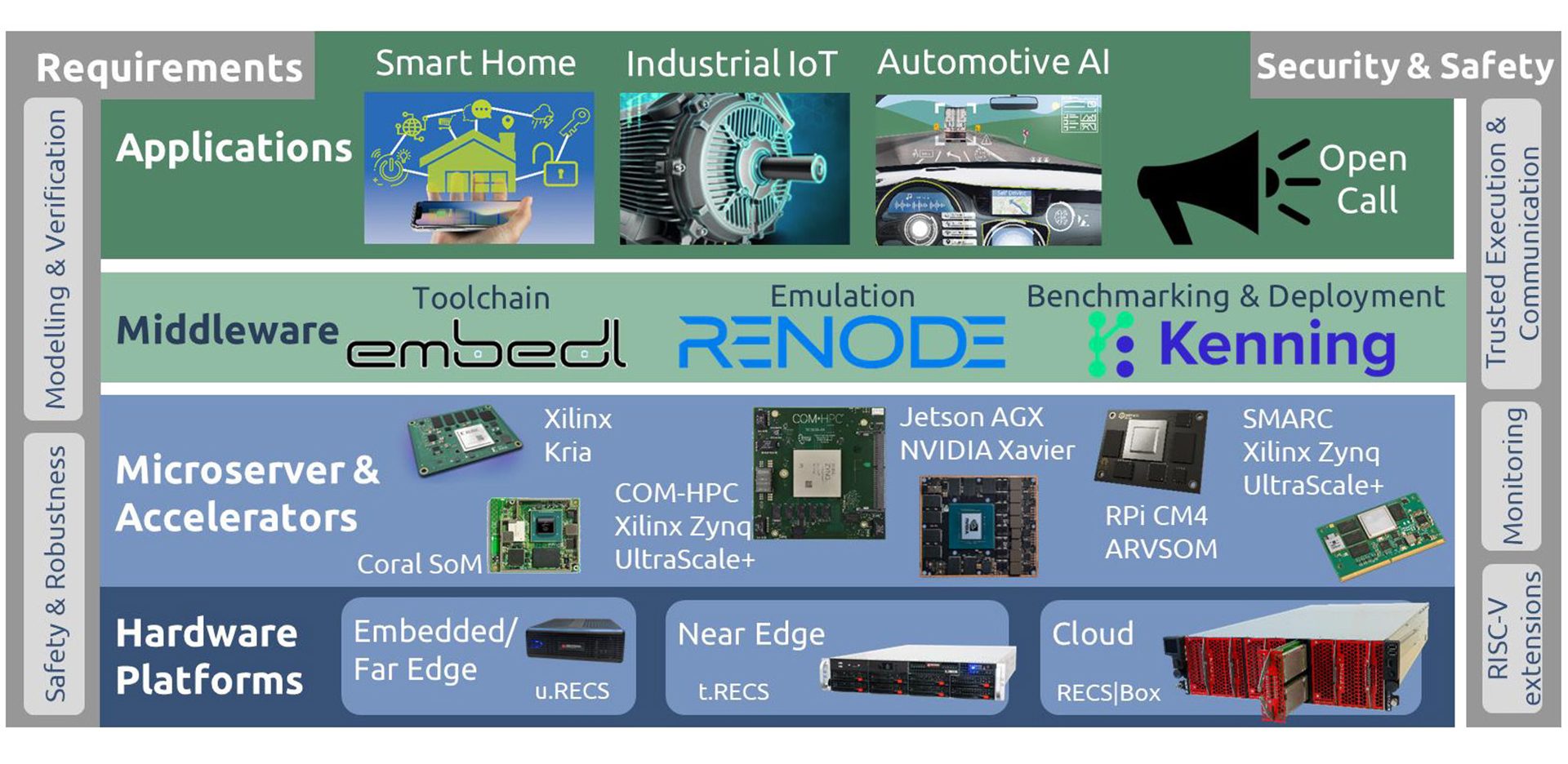

A high-level overview of the different VEDLIoT components is given in Fig. 1. The VEDLIoT project integrates Deep Learning with IoT to accelerate applications and optimise their energy efficiency. VEDLIoT achieves these objectives through several key components:

- Specialised AI accelerators: These accelerators are employed to optimise energy consumption, enabling significant reductions in energy usage without compromising performance. Additionally, they enhance the overall efficiency of Deep Learning models, enabling faster inference and improved scalability for IoT applications;

- Hardware-aware pruning and quantisation: By employing hardware-aware pruning and quantisation techniques, VEDLIoT accelerates Deep Learning models and reduces their memory footprint while maintaining high accuracy;

- Safety and security: The usage of hardware-based trusted execution environments ensures the integrity and reliability of the Deep Learning models deployed in IoT systems. Moreover, a specialised architectural framework helps to consider and integrate security and ethical aspects during requirements engineering; and

- Customisable hardware platforms: VEDLIoT leverages customisable hardware platforms, allowing for tailored solutions that meet specific IoT requirements and optimise Deep Learning algorithms.

VEDLIoT focuses on several use cases: demand-oriented interaction methods in smart homes (see Fig. 2), industrial IoT applications such as Motor Condition Classification and Arc Detection, and the Pedestrian Automatic Emergency Braking (PAEB) system in the automotive sector (see Fig. 3). VEDLIoT systematically optimises these use cases through a bottom-up approach that employs requirements engineering and verification techniques, as shown in Fig. 1. The project combines expert knowledge from diverse domains to create a robust middleware that supports development with testing, benchmarking, and deployment frameworks, ultimately ensuring that Deep Learning algorithms run efficiently and effectively within IoT systems. In the following sections, we briefly present each component of the VEDLIoT project.

Specialised AI accelerators

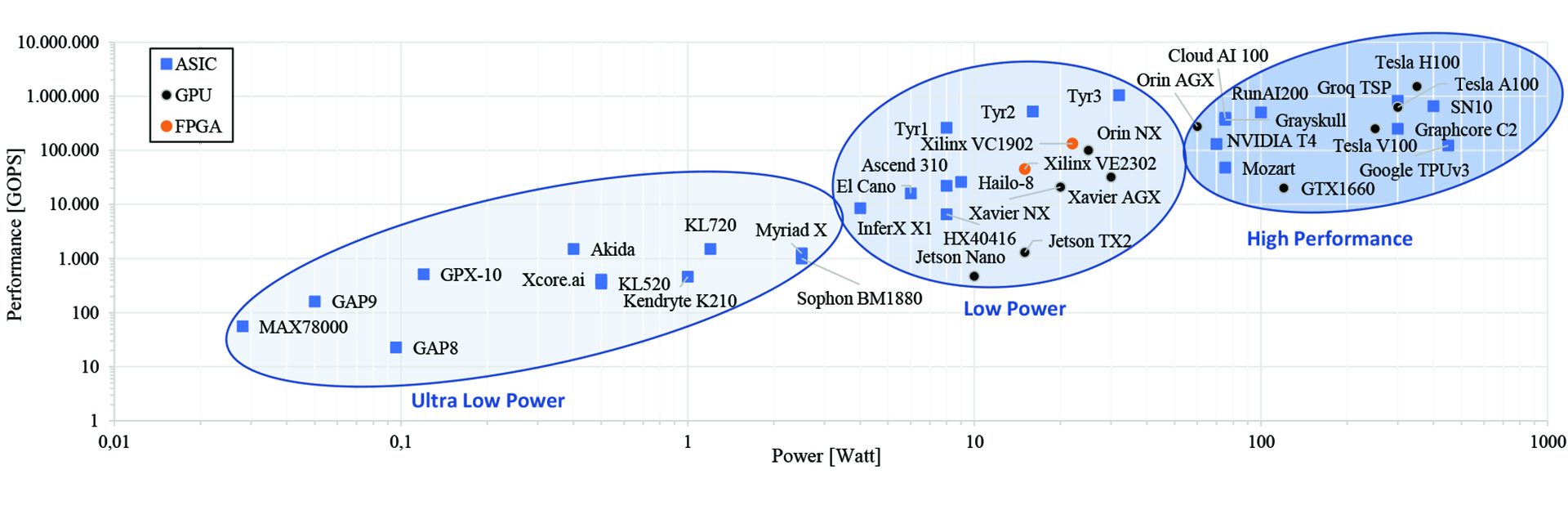

Various accelerators are available for a wide range of applications, from small embedded systems with power budgets in the milliwatt range to high-power cloud platforms. These accelerators are categorised into three main groups based on their peak performance values, as shown in Fig. 4.

The first group is the ultra-low power category (< 3 W), which consists of energy-efficient microcontroller-style cores combined with compact accelerators for specific Deep Learning functions. These accelerators are designed for IoT applications and offer simple interfaces for easy integration. Some accelerators in this category provide camera or audio interfaces for efficient vision or sound processing. Others offer a generic USB interface, allowing them to act as accelerator devices attached to a host processor. These ultra-low power accelerators are ideal for IoT applications where energy efficiency and compactness are key, delivering optimised Deep Learning performance without excessive power consumption.

The VEDLIoT predictive maintenance use case is a good example of an application that uses an ultra-low power accelerator. Low power consumption is one of its most important design criteria: the device is a small, battery-powered box that can be mounted externally on any electric motor and should monitor the motor for at least three years without a battery change.
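The three-year requirement translates directly into an average power budget, which in turn limits how often the accelerator may wake up to run inference. The sketch below works through that arithmetic. The battery capacity, voltage, active and sleep power figures are hypothetical values chosen for illustration, not numbers from the VEDLIoT device, and the model ignores self-discharge, temperature effects and leap years.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def average_power_mw(p_active_mw: float, p_sleep_mw: float, duty_cycle: float) -> float:
    """Time-weighted average power for a device active a fraction `duty_cycle` of the time."""
    return duty_cycle * p_active_mw + (1.0 - duty_cycle) * p_sleep_mw


def battery_life_years(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Ideal lifetime: stored energy (mWh) divided by average power (mW), in years."""
    energy_mwh = capacity_mah * voltage_v
    return energy_mwh / avg_power_mw / HOURS_PER_YEAR


def max_duty_cycle(capacity_mah: float, voltage_v: float, years: float,
                   p_active_mw: float, p_sleep_mw: float) -> float:
    """Largest active fraction that still lets the battery last `years`."""
    budget_mw = capacity_mah * voltage_v / (years * HOURS_PER_YEAR)
    if budget_mw <= p_sleep_mw:
        return 0.0  # even sleeping continuously would drain the battery too early
    return min(1.0, (budget_mw - p_sleep_mw) / (p_active_mw - p_sleep_mw))


# Hypothetical figures: 2600 mAh at 3.6 V, 100 mW while inferring, 0.05 mW asleep.
d = max_duty_cycle(2600, 3.6, 3, p_active_mw=100, p_sleep_mw=0.05)
print(f"max duty cycle: {d:.2%}")  # max duty cycle: 0.31%
```

Under these assumptions the device could be active for only about 0.3% of the time, which illustrates why both the accelerator's active power and its sleep power matter for such a use case.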

The next category is the low-power group (3 W to 35 W), which targets a broad range of automation and automotive applications. These accelerators feature high-speed interfaces for external memories and peripherals, as well as interfaces such as PCIe for efficient communication with other processing devices or host systems. They support modular and microserver-based approaches and are compatible with various platforms. Additionally, many accelerators in this category incorporate powerful application processors capable of running full Linux operating systems, allowing for flexible software development and integration. Some devices in this category include dedicated application-specific integrated circuits (ASICs), while others feature NVIDIA’s embedded graphics processing units (GPUs). These accelerators balance power efficiency and processing capability, making them well suited to various compute-intensive tasks in the automation and automotive domains.

The high-performance category (> 35 W) of accelerators is designed for demanding inference and training scenarios in edge and cloud servers. These accelerators offer exceptional processing power, making them suitable for computationally intensive tasks. They are commonly deployed as PCIe extension cards and provide high-speed interfaces for efficient data transfer. The devices in this category have high thermal design powers (TDPs), reflecting the substantial power they draw under heavy workloads. They include dedicated ASICs designed specifically for Deep Learning workloads, which enable faster inference and training. Some consumer-class GPUs may also be included in benchmarking comparisons to provide a broader perspective.
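The three power classes described above can be expressed as a simple classification rule. This is a minimal sketch using the thresholds stated in the text (< 3 W, 3 W to 35 W, > 35 W); the function names and the device labels in the example are hypothetical.

```python
from typing import Dict, List


def power_category(power_w: float) -> str:
    """Map an accelerator's power figure (in watts) to the three groups described above."""
    if power_w < 3:
        return "ultra-low power"   # < 3 W
    if power_w <= 35:
        return "low power"         # 3 W to 35 W
    return "high performance"      # > 35 W


def group_by_category(devices: Dict[str, float]) -> Dict[str, List[str]]:
    """Group named devices by power category, preserving input order."""
    groups: Dict[str, List[str]] = {
        "ultra-low power": [], "low power": [], "high performance": []
    }
    for name, power_w in devices.items():
        groups[power_category(power_w)].append(name)
    return groups


# Hypothetical devices and power figures, for illustration only.
print(group_by_category({"usb-stick": 1.5, "edge-module": 15.0, "pcie-card": 75.0}))
```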

Selecting the right accelerator from this wide range of options is not straightforward. VEDLIoT addresses this by assessing and evaluating various architectures, including GPUs, field-programmable gate arrays (FPGAs), and ASICs. The project examines these accelerators’ performance and energy consumption to determine their suitability for specific use cases. Drawing on this expertise and evaluation process, VEDLIoT guides the selection of Deep Learning accelerators both within the project and in the broader landscape of IoT and Deep Learning applications.
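One way to frame such a selection is as constrained optimisation over benchmark results: discard every candidate that violates a hard requirement of the use case, then rank the remainder by energy efficiency. The sketch below assumes that framing; it is not VEDLIoT's evaluation procedure. The `Candidate` fields, the ranking metric (inferences per joule) and all benchmark numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str        # hypothetical device label
    power_w: float   # average power measured while running the model
    fps: float       # measured throughput (inferences per second)
    accuracy: float  # accuracy of the model as deployed on this device

    @property
    def inferences_per_joule(self) -> float:
        # Energy efficiency: (inferences / s) / (J / s) = inferences / J.
        return self.fps / self.power_w


def select_accelerator(candidates: List[Candidate], power_budget_w: float,
                       min_fps: float, min_accuracy: float) -> Optional[Candidate]:
    """Keep candidates meeting every constraint, then pick the most energy-efficient."""
    feasible = [
        c for c in candidates
        if c.power_w <= power_budget_w and c.fps >= min_fps and c.accuracy >= min_accuracy
    ]
    return max(feasible, key=lambda c: c.inferences_per_joule, default=None)


# Hypothetical benchmark results, for illustration only.
results = [
    Candidate("ulp-npu", power_w=2.0, fps=30.0, accuracy=0.90),         # 15 inf/J
    Candidate("embedded-gpu", power_w=10.0, fps=200.0, accuracy=0.91),  # 20 inf/J
    Candidate("pcie-asic", power_w=60.0, fps=2000.0, accuracy=0.92),    # ~33 inf/J
]
print(select_accelerator(results, power_budget_w=15.0, min_fps=25.0, min_accuracy=0.89).name)
# embedded-gpu
```

In this made-up example the most efficient device overall is excluded by the 15 W budget, which shows why the choice depends on the specific use case rather than on a single headline metric.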

Hardware-aware pruning and quantisation

Trained Deep Learning models contain redundancy; in some cases they can be compressed by up to 49 times with negligible accuracy loss. Although many works address such compression, most report theoretical speed-ups that do not always translate into more efficient hardware execution, since they do not consider the target hardware. Moreover, deploying Deep Learning models on edge devices involves several steps, such as training, optimisation, compilation, and runtime execution. Although various frameworks are available for these steps, their interoperability varies, resulting in different outcomes and performance levels. VEDLIoT addresses these challenges through hardware-aware model optimisation using ONNX, an open format for representing Machine Learning models, ensuring compatibility with the existing open ecosystem. Additionally, Renode, an open-source simulation framework, serves as a functional simulator for complex heterogeneous systems, allowing complete Systems-on-Chip (SoCs) to be simulated while running the same software used on the hardware.
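To make the gap between theoretical and real gains concrete, the sketch below applies two generic textbook techniques, unstructured magnitude pruning and symmetric per-tensor int8 quantisation, to a synthetic weight vector and estimates the storage saving. It is an illustration under assumptions, not the EmbeDL or VEDLIoT method: the function names, the 90% sparsity, the 16-bit sparse index and the random "layer" are all hypothetical. Note that the reported figure is a storage ratio only; a dense accelerator still multiplies the zeroed weights unless its kernels exploit sparsity, which is exactly why hardware-aware choices (for example, structured pruning that removes whole channels) matter.

```python
import random
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Unstructured magnitude pruning: zero the `sparsity` fraction of smallest |w|."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def quantise_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantisation: w is approximated by scale * q, |q| <= 127."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantise(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate real-valued weights."""
    return [scale * v for v in q]


def sparse_int8_bits(q: List[int], index_bits: int = 16) -> int:
    """Storage if each non-zero keeps an 8-bit value plus a position index."""
    nnz = sum(1 for v in q if v != 0)
    return nnz * (8 + index_bits)


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(1000)]  # stand-in for one layer

pruned = magnitude_prune(weights, sparsity=0.9)
q, scale = quantise_int8(pruned)
dense_fp32_bits = 32 * len(weights)
ratio = dense_fp32_bits / sparse_int8_bits(q)
print(f"storage reduction: {ratio:.1f}x")  # storage reduction: 13.3x
```

Even in this toy case, the per-weight index overhead eats into the saving, and none of it becomes a speed-up on hardware that only executes dense operations.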

Furthermore, VEDLIoT uses the EmbeDL toolkit to optimise Deep Learning models. The EmbeDL toolkit offers comprehensive tools and techniques for deploying Deep Learning models efficiently on resource-constrained devices. By considering hardware-specific constraints and characteristics, the toolkit enables developers to compress, quantise, prune, and optimise models while minimising resource utilisation and maintaining high inference accuracy. EmbeDL focuses on hardware-aware optimisation so that Deep Learning models can be deployed effectively on edge and IoT devices, unlocking the potential for intelligent applications in various domains. With EmbeDL, developers can achieve higher performance, faster inference, and improved energy efficiency, making it a valuable resource for those seeking to maximise the potential of Deep Learning in real-world applications.

Safety and security

Since VEDLIoT combines Deep Learning with IoT systems, ensuring security and safety is crucial. To build these aspects into its core, the project leverages trusted execution environments (TEEs), such as Intel SGX and ARM TrustZone, along with open-source WebAssembly runtimes. TEEs provide secure environments that isolate critical software components and protect them against unauthorised access and tampering. By using WebAssembly, VEDLIoT offers a common execution environment across the entire continuum, from IoT devices through the edge and into the cloud.

In the context of TEEs, VEDLIoT introduces Twine and WaTZ as trusted runtimes for Intel’s SGX and ARM’s TrustZone, respectively. These runtimes simplify the creation of software within secure environments by leveraging WebAssembly and its modular interface. This bridges the gap between trusted execution environments and AIoT, helping to integrate Deep Learning frameworks seamlessly. By running WebAssembly within TEEs, VEDLIoT achieves robust, hardware-independent protection against malicious interference, preserving the confidentiality of both data and Deep Learning models. This approach reflects VEDLIoT’s commitment to securing critical software components, enabling secure development, and facilitating privacy-enhanced AIoT applications in cloud-edge environments.

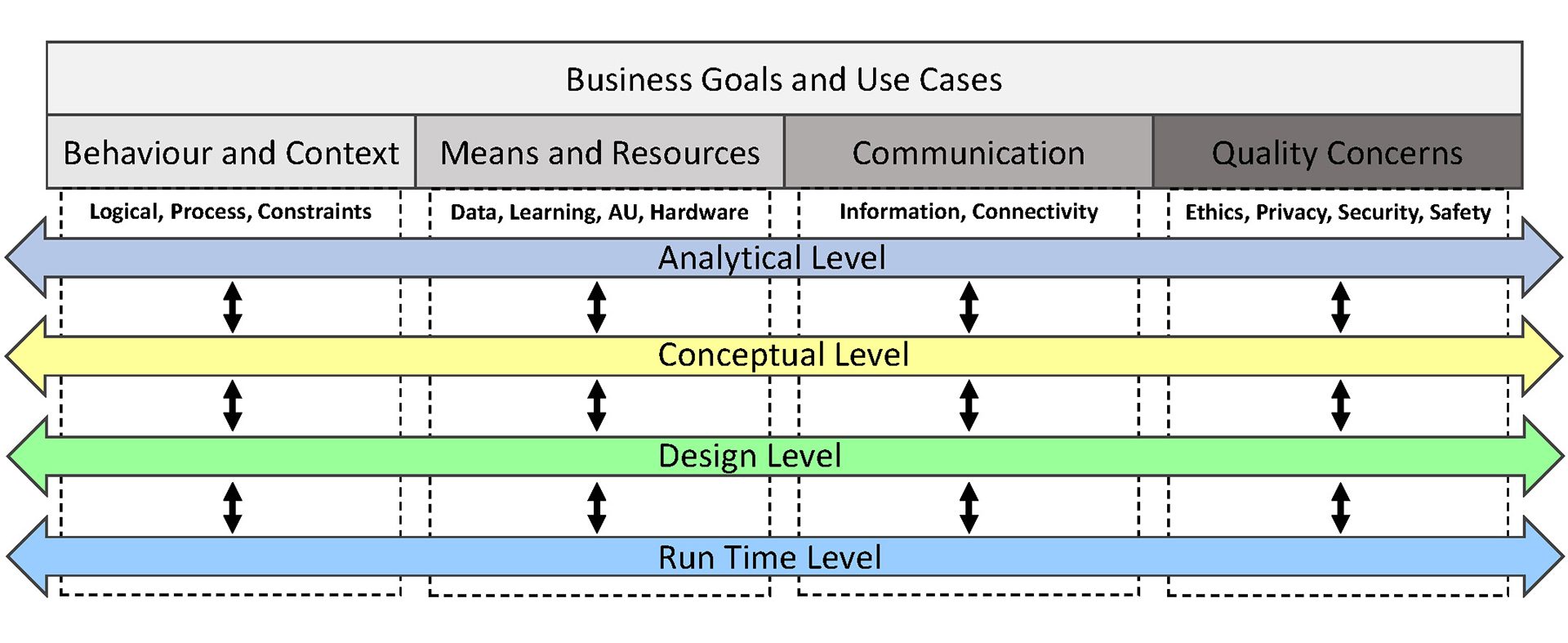

Additionally, VEDLIoT employs a specialised architectural framework, as shown in Fig. 5, that helps to define, synchronise and co-ordinate the requirements and specifications of AI components and traditional IoT system elements. This framework consists of various architectural views that address the system’s specific design concerns and quality aspects, including security and ethical considerations. When these architecture views are used as templates and filled out, correspondences and dependencies can be identified between the quality-defining views and other design decisions, such as AI model construction, data selection, and communication architecture. This holistic approach ensures that security and ethical aspects are integrated into the overall system design, reinforcing VEDLIoT’s commitment to robustness and addressing emerging challenges in AI-enabled IoT systems.
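One way to reason about such correspondences is to treat the views as nodes in a directed graph, where an edge means that a decision in one view constrains another. A breadth-first search from a changed view then lists every view that may need to be revisited. The sketch below assumes this graph model; the view names and edges are hypothetical and do not reproduce the actual VEDLIoT framework.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical correspondences between architecture views: an edge A -> B means
# "a decision recorded in view A constrains view B". Names are illustrative only.
CORRESPONDENCES: Dict[str, List[str]] = {
    "security": ["communication architecture", "data selection"],
    "ethics": ["data selection", "AI model construction"],
    "data selection": ["AI model construction"],
    "communication architecture": ["deployment"],
    "AI model construction": ["deployment"],
    "deployment": [],
}


def impacted_views(changed: str, graph: Dict[str, List[str]]) -> Set[str]:
    """Breadth-first search: every view reachable from `changed` may need revisiting."""
    seen: Set[str] = set()
    queue = deque([changed])
    while queue:
        view = queue.popleft()
        for neighbour in graph.get(view, []):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


print(sorted(impacted_views("ethics", CORRESPONDENCES)))
# ['AI model construction', 'data selection', 'deployment']
```

In this toy model, a change to an ethical requirement propagates through data selection and model construction to deployment, which is the kind of dependency the filled-out views are meant to make explicit.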

Customisable hardware platforms for IoT systems

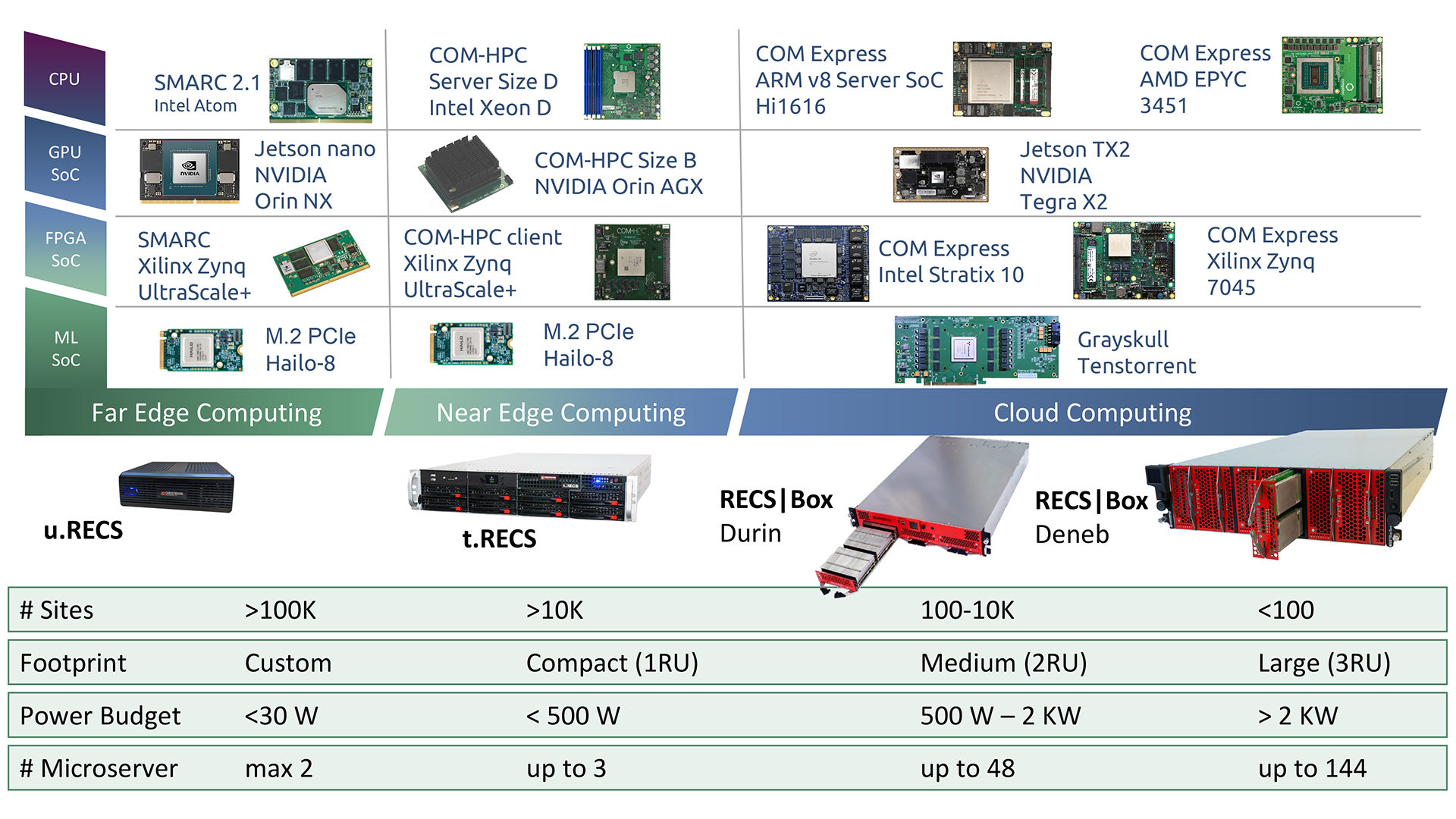

Traditional hardware platforms typically support only homogeneous IoT systems. RECS, an AI-enabled microserver hardware platform, instead allows diverse technologies to be integrated seamlessly. This enables the platform to be fine-tuned towards specific applications, providing a comprehensive cloud-to-edge platform. All RECS variants share the same design paradigm: a densely coupled, highly integrated communication infrastructure. The variants use microservers of different sizes, from credit card size to tablet size, allowing customers to choose the best variant for each use case and scenario. Fig. 6 gives an overview of the RECS variants.

The three RECS platforms are suited to cloud/data centre (RECS|Box), edge (t.RECS) and IoT usage (u.RECS). All RECS servers use industry-standard microservers, which are exchangeable and allow the latest technology to be adopted simply by changing a microserver. Hardware providers of these microservers offer a wide spectrum of computing architectures, such as Intel, AMD and ARM CPUs, FPGAs, and combinations of a CPU with an embedded GPU or AI accelerator.

Outlook

VEDLIoT addresses the challenge of bringing Deep Learning to IoT devices with limited computing performance and low power budgets. The VEDLIoT AIoT hardware platform provides optimised hardware components and additional accelerators for IoT applications covering the entire spectrum, from embedded via edge to the cloud. In addition, a powerful middleware eases the programming, testing, and deployment of neural networks on heterogeneous hardware. New methodologies for requirements engineering, coupled with safety and security concepts, are incorporated throughout the complete framework. The concepts are tested and driven by challenging use cases in key industry sectors such as automotive, automation, and smart homes.

The VEDLIoT project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 957197.

Please note, this article will also appear in the fifteenth edition of our quarterly publication.