Professor Masashi Unoki, from the Japan Advanced Institute of Science and Technology, discusses his work on developing a computational model of the auditory system.

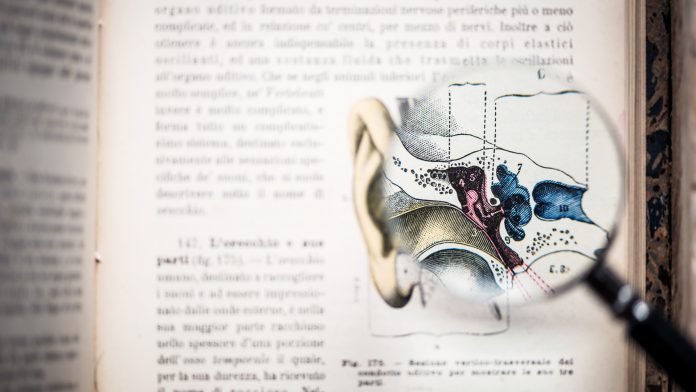

The human auditory system is complex. This is partly because the human ear itself is complicated, but also because of the ways in which our brain receives and processes the sounds we hear. This complexity raises challenges in many areas, including the development of hearing aids for people with hearing loss or deafness, as well as other technologies such as speech recognition software and digital security tools designed to protect sound files, such as music, from being copied and misused.

Professor Masashi Unoki, from the Japan Advanced Institute of Science and Technology, is working to develop a computational model of the auditory system that could have applications in some of these areas. Innovation News Network spoke with him about the challenges he faces and the progress he has made so far.

What are the main challenges involved in developing a computational auditory model that can mimic the human ability to target specific sounds? Why is it important that this is achieved?

The main aim is to develop a computational model of the auditory system, and this involves several challenges: constructing an auditory filterbank that can account for human auditory data (such as masking data), and then computationally modelling 'auditory scene analysis', which recognises sound events as well as our physical environment. We then hope to provide auditory-motivated speech signal processing that can mimic human speech perception.

This work draws on research in psychology, physiology, and information science, and it is closely related to an auditory counterpart of the computational theory of vision proposed by David Marr. If such a computational theory of our auditory system can be constructed, it may not only clarify human auditory functions but also contribute to applications such as pre-processors for robust speech recognition systems and the modelling of psychoacoustical phenomena. However, efforts so far to construct such a model by fully applying Marr's framework have been unsuccessful, because our understanding of the psychoacoustical and physiological elements of the auditory system is still limited.

The aim of my first project, during my PhD and then as a young researcher, was to develop a computational model of the auditory system. In it, I studied auditory sound segregation. My approach was based on two main ideas:

- Constraints on the sound source and environmental conditions are necessary to uniquely solve the sound segregation problem (an ill-posed inverse problem); and

- Psychoacoustical heuristic regularities proposed by Bregman can be used to uniquely solve the sound segregation problem (auditory scene analysis).

Other approaches have often failed to handle the segregation of real sounds, such as consonants, bursts, and other complex sounds. Building on the two ideas above, however, I have since proposed a strategy for auditory sound segregation problems such as vowel segregation, which provides a method for constructing a computational theory of auditory sound segregation. Since my aim is to develop a highly accurate sound segregation system based on this method, the method must also be extended to handle the segregation of real sounds using reasonable constraints other than Bregman's regularities.

This has required a more in-depth study of the auditory sound segregation algorithm: that is, whether a strategy using reasonable constraints can simulate auditory sound segregation. From this, it has become clear that I need clear definitions of, and boundaries between, psychoacoustical, physiological, and mathematical constraints.

I must also ensure that these constraints are sufficient to simulate auditory sound segregation, so that a computational theory of auditory sound segregation can be constructed. This requires a study of the adequacy and necessity of the constraints in the following steps:

- Step One: a study of the required constraints for auditory sound segregation using computer simulation and hearing tests; and

- Step Two: a study of the constraints for auditory sound segregation using psychoacoustical and physiological tests.

What is the ‘cocktail party effect’? How are you planning to model this and why?

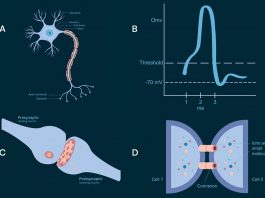

The ‘cocktail party effect’ can be defined as ‘the phenomenon of the brain’s ability to focus one’s auditory attention (an effect of selective attention in the brain) on a particular stimulus while filtering out a range of other stimuli, as when a partygoer can focus on a single conversation in a noisy room.’

Humans can easily hear a target sound that they are listening for in real environments, including noisy and reverberant ones. By contrast, it is very difficult for a machine to perform the same task using a computational auditory model. Implementing auditory signal processing on a computer that functions like the human hearing system would enable human-like speech signal processing, which would be well suited to applications such as speech recognition and hearing aids.

We have used an auditory filterbank to process speech signals in the following projects:

- The development of the selective sound segregation model;

- The development of the noise reduction model based on auditory scene analysis; and

- The development of speech enhancement methods based on the concept of the modulation transfer function.

In these projects, we typically use the gammatone auditory filterbank as a first approximation to a nonlinear auditory filterbank. Our goal is to model the 'cocktail party effect' and to apply this model to challenging problems by extending these projects with nonlinear auditory signal processing.
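To make the filterbank stage concrete, here is a minimal sketch of a linear gammatone filterbank in Python with NumPy. It assumes the common fourth-order gammatone impulse response, with bandwidths from Glasberg and Moore's ERB formula and centre frequencies spaced evenly on the ERB-rate scale. The function names, the 100 Hz to 6 kHz range and the 32 channels are illustrative choices, not parameters taken from the projects described here.

```python
import numpy as np


def erb_hz(fc):
    """Equivalent rectangular bandwidth in Hz (Glasberg & Moore, 1990)."""
    return 24.7 * (4.37 * fc / 1000.0 + 1.0)


def erb_space(f_low, f_high, n_channels):
    """Centre frequencies spaced evenly on the ERB-rate scale."""
    def to_rate(f):
        return 21.4 * np.log10(4.37 * f / 1000.0 + 1.0)

    def from_rate(e):
        return (10.0 ** (e / 21.4) - 1.0) * 1000.0 / 4.37

    return from_rate(np.linspace(to_rate(f_low), to_rate(f_high), n_channels))


def gammatone_ir(fc, fs, duration=0.05, order=4, b=1.019):
    """Sampled gammatone impulse response t^(n-1) exp(-2*pi*b*ERB*t) cos(2*pi*fc*t)."""
    t = np.arange(int(duration * fs)) / fs
    g = (t ** (order - 1)
         * np.exp(-2.0 * np.pi * b * erb_hz(fc) * t)
         * np.cos(2.0 * np.pi * fc * t))
    # Scale so the peak of the sampled magnitude response is 1 (unit gain near fc).
    return g / np.max(np.abs(np.fft.rfft(g)))


def gammatone_filterbank(x, fs, f_low=100.0, f_high=6000.0, n_channels=32):
    """Filter x through each channel; returns (centre_freqs, outputs[channel, sample])."""
    x = np.asarray(x, dtype=float)
    cfs = erb_space(f_low, f_high, n_channels)
    outputs = np.stack([np.convolve(x, gammatone_ir(fc, fs))[: len(x)] for fc in cfs])
    return cfs, outputs


if __name__ == "__main__":
    fs = 16000
    t = np.arange(fs) / fs
    tone = np.sin(2.0 * np.pi * 1000.0 * t)
    cfs, out = gammatone_filterbank(tone, fs)
    rms = np.sqrt(np.mean(out ** 2, axis=1))
    print(f"strongest channel: {cfs[np.argmax(rms)]:.0f} Hz")
```

Passing a 1 kHz tone through the bank should give the largest output in the channel whose centre frequency lies closest to 1 kHz. A nonlinear auditory filterbank would additionally make the filter shape depend on signal level, which this linear sketch does not attempt.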

Can you describe some of these research projects? What are the practical applications of such technologies?

I am currently involved in several projects. One that I would particularly like to highlight focuses on the mechanisms by which we perceive the quality of objects in our environment (known as 'shitsukan') through several of our senses, here in the context of speech perception based on the concept of amplitude modulation. We argue that humans should be able to perceive the auditory texture of an object from its corresponding acoustical features, without having to estimate room acoustic information, when they perceive the objective (target) sound in their daily environments.

In room acoustics, the speech transmission index (STI) is used to assess the quality and intelligibility of the objective sound in terms of its modulation characteristics. It can thus be inferred that physical information about amplitude modulation plays an important role in accounting for the perceptual aspects of shitsukan, when the concept of modulation perception is combined with room acoustics.
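The link between reverberation and amplitude modulation can be illustrated with the classic modulation transfer function of an exponentially decaying room, on which the STI is built. The sketch below assumes a noise-free diffuse field and averages the transmission index over the 14 standard modulation frequencies in a single band. The full STI procedure (IEC 60268-16) also weights seven octave bands and accounts for noise and masking, which is omitted here; the function names are hypothetical.

```python
import numpy as np

# One-third-octave modulation frequencies (Hz) conventionally used for the STI.
MOD_FREQS_HZ = np.array(
    [0.63, 0.8, 1.0, 1.25, 1.6, 2.0, 2.5, 3.15, 4.0, 5.0, 6.3, 8.0, 10.0, 12.5]
)


def reverb_mtf(mod_freqs, rt60):
    """Modulation transfer function of an exponentially decaying (diffuse) room.

    m(F) = 1 / sqrt(1 + (2*pi*F*T/13.8)^2), with T the reverberation time in s.
    Background noise is ignored in this sketch.
    """
    return 1.0 / np.sqrt(1.0 + (2.0 * np.pi * np.asarray(mod_freqs) * rt60 / 13.8) ** 2)


def transmission_index(m):
    """Map modulation transfer values to transmission indices in [0, 1]."""
    m = np.clip(m, 1e-9, 1.0 - 1e-9)  # avoid log of 0 and division by 0
    snr_apparent = np.clip(10.0 * np.log10(m / (1.0 - m)), -15.0, 15.0)
    return (snr_apparent + 15.0) / 30.0


def single_band_sti(rt60):
    """Average transmission index over the 14 modulation frequencies (one band only)."""
    return float(np.mean(transmission_index(reverb_mtf(MOD_FREQS_HZ, rt60))))


for rt60 in (0.3, 1.0, 2.0):
    print(f"RT60 = {rt60:.1f} s -> single-band STI ~ {single_band_sti(rt60):.2f}")
```

Longer reverberation times smear the temporal envelope, reducing the modulation transfer most at higher modulation frequencies, so the index falls as the reverberation time grows.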

This study aims to understand the mechanisms of shitsukan recognition in the speech perception of the objective sound under room acoustic conditions. In particular, it addresses the following four points:

- To construct time-frequency and temporal-modulation-frequency analysis methods that track instantaneous fluctuations in the modulation spectrum, in order to capture the temporal fluctuations of amplitude modulation related to shitsukan in speech perception;

- To identify which physical features are related to auditory shitsukan recognition, such as tension in speech perception;

- To investigate whether perceptual aspects related to room acoustics affect speech shitsukan recognition of the objective sound; and

- To investigate whether human beings recognise shitsukan in their speech perception of the objective sound by separating out the room acoustic information.
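As a rough illustration of the first point, the sketch below computes a modulation spectrum. It extracts the amplitude envelope of a signal with an FFT-based Hilbert transform and then takes the spectrum of that envelope, normalised so that peaks read as modulation depth. This is a deliberately simple, whole-signal version: the methods described above also track how the modulation spectrum fluctuates over time and would typically be applied to each auditory-filter channel. The function names and the test signal are hypothetical.

```python
import numpy as np


def hilbert_envelope(x):
    """Amplitude envelope |x + j*H{x}| via the FFT-based analytic signal."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    # Keep DC (and Nyquist for even n), double positive frequencies, zero negative ones.
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1 : n // 2] = 2.0
    else:
        weights[1 : (n + 1) // 2] = 2.0
    analytic = np.fft.ifft(np.fft.fft(x) * weights)
    return np.abs(analytic)


def modulation_spectrum(x, fs):
    """Return (modulation_freqs, modulation_index) of the envelope of x.

    Each value is the amplitude of one envelope component divided by the mean
    envelope, so sinusoidal AM with depth m shows up as a peak of height ~m.
    """
    env = hilbert_envelope(x)
    mean_env = env.mean()
    spectrum = np.abs(np.fft.rfft(env - mean_env)) * 2.0 / len(env) / mean_env
    freqs = np.fft.rfftfreq(len(env), d=1.0 / fs)
    return freqs, spectrum


fs = 16000
t = np.arange(fs) / fs
# Test signal: 1 kHz carrier, amplitude-modulated at 4 Hz with depth 0.5.
am_tone = (1.0 + 0.5 * np.sin(2.0 * np.pi * 4.0 * t)) * np.cos(2.0 * np.pi * 1000.0 * t)
freqs, mod_spec = modulation_spectrum(am_tone, fs)
peak = np.argmax(mod_spec)
print(f"peak at {freqs[peak]:.1f} Hz, modulation depth ~ {mod_spec[peak]:.2f}")
```

For this one-second test signal, the spectrum should peak at 4 Hz with a height close to 0.5. A time-varying version could apply the same analysis to successive overlapping frames, provided each frame is long enough to resolve the lowest modulation frequencies of interest.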

It is hoped that this project will provide important knowledge for developing hearing-assistance systems such as cochlear implants.

Professor Masashi Unoki

Graduate School of Advanced Science and Technology

Japan Advanced Institute of Science and Technology

unoki@jaist.ac.jp

www.jaist.ac.jp/~unoki

Please note, this article will also appear in the third edition of our new quarterly publication.