Professor Tiziano Camporesi, former spokesman of the CMS experiment at CERN, discusses the challenges of upgrading the experiment and the importance of the precision frontier.

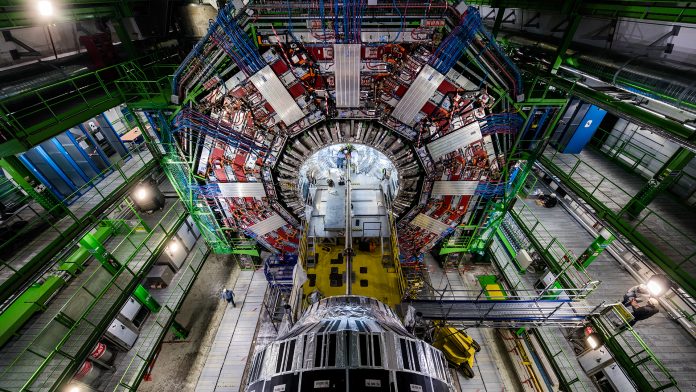

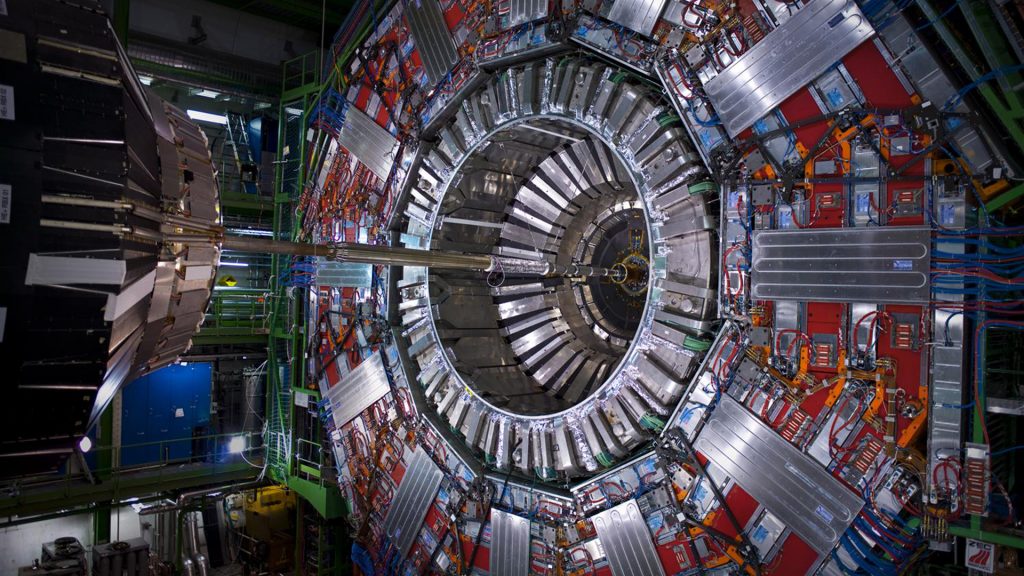

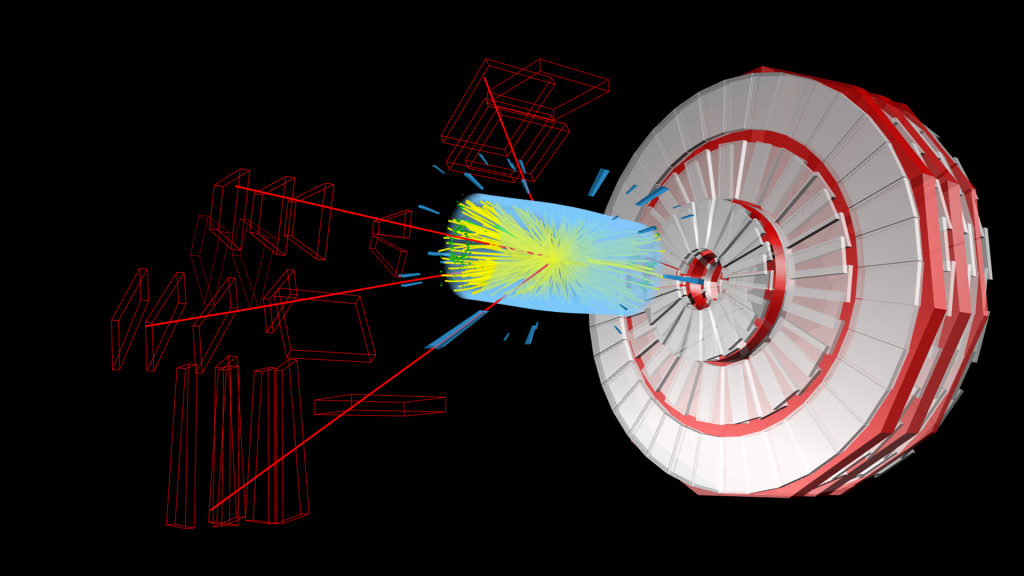

A general-purpose detector at CERN’s Large Hadron Collider (LHC), the Compact Muon Solenoid (CMS) experiment has a broad physics programme, ranging from studying the Standard Model (including the Higgs boson) to searching for extra dimensions and particles that could make up dark matter. The detector is built around a huge solenoid magnet: a cylindrical coil of superconducting cable that generates a field of 4 tesla, about 100,000 times the magnetic field of the Earth. The field is confined by a steel ‘yoke’ that forms the bulk of the detector’s 14,000-tonne weight.

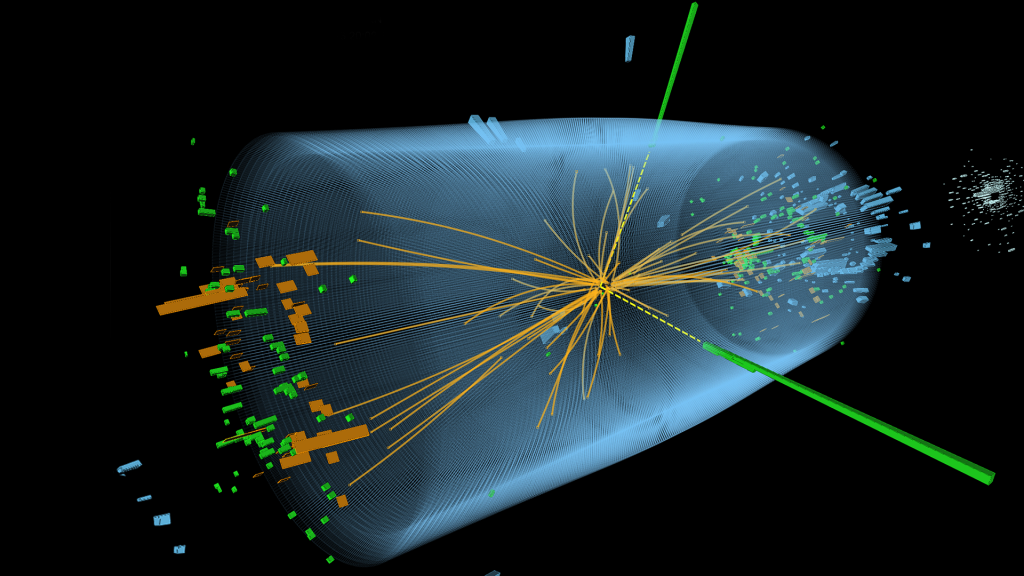

The CMS experiment is one of the largest international scientific collaborations in history. CMS acts as a giant, high-speed camera, taking 3D ‘photographs’ of particle collisions from all directions up to 40 million times each second. Although most of the particles produced in the collisions are ‘unstable’, they transform rapidly into stable particles that can be detected by CMS. By identifying (nearly) all the stable particles produced in each collision, measuring their momenta and energies, and then piecing together the information from all these particles like the pieces of a puzzle, the detector can recreate an ‘image’ of the collision for further analysis.

Innovation News Network spoke to CMS’s former spokesman, Professor Tiziano Camporesi, about the challenges of upgrading the experiment and the importance of the precision frontier.

What is your role at the CMS experiment?

I am currently the team leader of the CERN component of CMS. All of the experiments at CERN, including CMS, are independent of the laboratory itself: CMS is a collaboration of some 210 institutes from some 50 countries, with CERN being one of those participants. As with any of the other participating labs, I am provided with a budget and a team, which I am tasked to manage. My team numbers around 180 people, including students, technicians, engineers, and postdocs, and I control its operational budget, the most important element of which at the moment is the budget for the upgrades.

What have been the biggest successes – and perhaps challenges – at the CMS experiment since the discovery of the Higgs boson?

The experiments at the LHC are considered to be frontier experiments, and I believe that we are maintaining that role even after the successful discovery of the Higgs. However, that discovery has changed our focus: by accumulating ever more data and developing a better understanding of how our experiments, devices, and practices work, we are becoming more of a precision experiment, on top of being an intensity-frontier experiment.

Perhaps one of the most remarkable things is that since the discovery of the Higgs we have produced another 800 scientific research papers, many of which explore the energy frontier. And while, unfortunately, we have not yet found anything new, we are using the energy of the machine to search for possible new phenomena in Nature.

A lot of work has gone into achieving very high precision and, of course, the main focus has been understanding, in minute detail, how the Higgs behaves. Compared to our knowledge at the time of the discovery, when we had barely established that the Higgs existed and that it decayed roughly as expected, today we are able to measure quantities such as differential cross sections and the details of how the Higgs decays. We have also been studying the different ways in which the Higgs can be produced. With a mass of 125 GeV, the Higgs boson can decay in many different ways: studying them all is an enormous amount of work, but it also allows very detailed checks of its fundamental nature.

Many theorists were surprised that the Higgs was found to have such a low mass: this is seen as a possible hint of new phenomena and is an even more compelling reason to make precision measurements to check whether anything deviates from the predictions of the Standard Model. That is no easy task. We are currently at a global level of 10% precision in understanding the differential cross sections if you combine the production and decay mechanisms. To reach the level at which we would expect to start seeing whether the Higgs deviates from expectations, we need a precision of 1–2%, so there is more work yet to do. In the future, we expect to accumulate ten times more data than we have today, which will enable us to pursue these precision measurements and really figure out whether there is something beyond the Standard Model.
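The link between more data and better precision can be illustrated with a simple scaling argument. This minimal sketch assumes, as an idealisation, that the uncertainty is purely statistical and therefore shrinks as 1/√N with the number of recorded events N. In reality, systematic and theoretical uncertainties do not shrink this way, so the numbers are a best case rather than any CMS projection; the function names are hypothetical.

```python
import math

def scaled_stat_uncertainty(current_rel_unc: float, data_factor: float) -> float:
    """Relative uncertainty after multiplying the dataset by data_factor,
    assuming (idealised) a purely statistical uncertainty scaling as 1/sqrt(N)."""
    return current_rel_unc / math.sqrt(data_factor)

def required_data_factor(current_rel_unc: float, target_rel_unc: float) -> float:
    """Factor by which the dataset must grow to reach target_rel_unc,
    under the same purely statistical 1/sqrt(N) assumption."""
    return (current_rel_unc / target_rel_unc) ** 2

current = 0.10  # ~10% global precision quoted in the interview
print(f"{scaled_stat_uncertainty(current, 10.0):.3f}")  # 0.032
print(f"{required_data_factor(current, 0.02):.0f}")     # 25
print(f"{required_data_factor(current, 0.01):.0f}")     # 100
```

Under this simplification, ten times more data takes a 10% uncertainty to only about 3%, while reaching 1% would need roughly a hundred times more data. That is why statistics alone are unlikely to close the gap to 1–2% without also reducing the other sources of uncertainty.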

When we look back at previous experiments and theories, the importance of the interplay between discovery and precision becomes clear. Take the top quark as an example: its mass was inferred from high-precision measurements of Standard Model quantities at earlier particle physics experiments, with better precision than was achieved when it was first directly measured. Thus, to a certain extent at least, the interplay between discovery and precision allows us to decide which direction to look in. To return to the Higgs and physics beyond the Standard Model: theory is essentially at a loss. There is an incredible number of theoretical papers trying to explain some of the oddities and to go beyond the Standard Model, but I believe that it is the experimentalists who will be able to define which part of the theoretical world we should focus on in the future.

Of course, precision in our measurements will not only play a role in our exploration of the Higgs; it will also continue to prove crucial elsewhere. I believe that we have already exploited a lot in terms of energy, and so now we must look to greater precision for the future.

In 2020, new results from the CMS Collaboration demonstrated for the first time that top quarks are produced in nucleus-nucleus collisions. How important was this, and how do you hope it will enable the extreme state of matter thought to have existed shortly after the Big Bang to be studied in a new and unique way?

This concerns the quark-gluon plasma, the primordial broth that we believe filled the Universe in its first instants. That plasma has been extensively explored and is now quite well understood thanks to the data collected by the four experiments (CMS, ATLAS, ALICE, and LHCb) when the LHC collides heavy ions.

The plasma is generated for an instant when the ions collide, and then it dissipates. To gain a better understanding of how it behaves, we therefore use particles that we already know, and we have used quarks produced in the collisions as probes of the nature of the plasma. While all kinds of quarks have proved useful in providing information on the plasma’s characteristics, the top quark has the potential to provide the most interesting results. Because it is so massive, the top quark is produced and decays while the plasma is still evolving. This will allow us to take snapshots at different moments of the plasma’s evolution, rather than an average of its behaviour, which is very important for understanding the fundamentals of how the plasma behaves.

Looking to the future, the next LHC heavy-ion run is expected to generate between six and eight times more data than we have accumulated so far. With such an increase, we hope to make much greater use of the top quark, as we will have enough statistics to follow the plasma’s evolution in detail. Currently, there are many theoretical models which predict how the quark-gluon plasma evolves, and there is a lot of diversity among them. If we are able to use the top quark to take snapshots of this incredibly early time in our Universe, then we stand to gain a much better understanding of it.

What role do you hope CMS will play in developing our understanding of dark matter – and, perhaps, the detection of supersymmetric particles?

This is essentially referring to the energy frontier, rather than the precision that we have already discussed – although the two frontiers are becoming increasingly mixed into what is now known as the intensity frontier.

There is a growing consensus that we have now collected all of the low-hanging fruit and are moving on to more difficult challenges. For example, supersymmetry (which, in my opinion, remains the most likely theory to account for dark matter) is both large and complex: there are 153 parameters which determine the details of the spectrum of new particles that could be produced. As such, a lot of work remains to be done.

There are perhaps two main areas for consideration. The first, which has been quite thoroughly explored, is that supersymmetric particles may be hiding behind the particles we already know. That is difficult because it requires enhanced levels of precision in measuring quantities such as missing energy. The second is that the supersymmetric particles which eventually decay into dark matter particles could be particularly long-lived. This presents a challenge because those particles would travel inside our detectors for quite a while (relatively speaking), and our detectors are not optimised for that, which means that we could have been producing supersymmetric particles all along without seeing them.

Indeed, bunches of protons collide 40 million times per second at the LHC, which means 40 million event pictures are produced every second. But out of them, only a couple of thousand or so are selected, a process known as triggering. This selection aims to find the best possible events and uses two main criteria: one is to see whether there is something there that we have not seen before (this used to be the Higgs but is now supersymmetry). Until now, we did that by triggering on the low-hanging fruit. Now that we know those signals are not there, we need to make our selection much more sophisticated and try to see particles that live longer inside the detectors. However, those trigger decisions will need to be made in real time, within 3.5 microseconds, and so my team is now working to develop a way to make that happen. That is a small window, but it is nevertheless one way we might be able to discover supersymmetry.
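The figures in this answer imply two useful numbers, sketched below as back-of-the-envelope arithmetic: how many crossings are rejected for each one that is kept, and how many new crossings arrive while a single trigger decision is still pending. The sketch assumes that “a couple of thousand” means 2,000 per second, uses the 40 MHz rate and 3.5 microsecond window quoted in the interview, and its function names are hypothetical.

```python
CROSSING_RATE_HZ = 40e6   # 40 million event pictures per second
ACCEPTED_RATE_HZ = 2e3    # assumed reading of "a couple of thousand" kept per second
LATENCY_S = 3.5e-6        # real-time decision window quoted in the interview

def rejection_factor(input_rate_hz: float, output_rate_hz: float) -> float:
    """How many crossings are discarded for each one that is kept."""
    return input_rate_hz / output_rate_hz

def crossings_in_flight(rate_hz: float, latency_s: float) -> int:
    """Number of new crossings arriving while one decision is pending,
    i.e. roughly how many events must be buffered during the latency."""
    return round(rate_hz * latency_s)

print(rejection_factor(CROSSING_RATE_HZ, ACCEPTED_RATE_HZ))  # 20000.0
print(crossings_in_flight(CROSSING_RATE_HZ, LATENCY_S))      # 140
```

At 40 MHz a new crossing arrives every 25 ns, so a 3.5 microsecond window means about 140 crossings must be held in buffers while each decision is made. This is one reason such first-level selections run in custom, pipelined hardware rather than in offline software.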

Supersymmetry and dark matter are connected, and I believe that our search for the former, using both approaches, holds a lot of potential for also discovering the latter.

Dark matter might show itself in different ways; it might, for instance, be a different kind of particle. If it proves to be made of axions, then we will be unable to see it at the LHC; that discovery will fall to other experiments. But if it is a supersymmetric particle, the LHC is well placed to find it. We have placed limits on the energy range in which dark matter may lie, and those limits are pushing what we can do with the machines that we have. But even a small hint of dark matter would be good news for future machines.

How has CMS been upgraded for the HL-LHC phase?

It is a race against time. The upgrade approved by the CERN Council in 2012, which will increase the luminosity of the LHC by a factor of ten, presents enormous challenges for our detectors. When the detectors were built, they were designed to last until 2020, so they have already exceeded their design lifetime. Moreover, the current accelerator has performed much better than expected, which means that the detectors have been exposed to much more radiation than was planned.

The tenfold increase in luminosity at HiLumi means a tenfold increase in radiation, and the current detectors will not be able to withstand that. We therefore have to replace them, which is a large undertaking and will include the central part of the tracker and all of the forward calorimeters.

Because HiLumi will see so many more particles coming into the detector, we also need to replace most of the electronics which are used today to acquire data. And, of course, the electronics currently in use are 20 years old. We are no longer able to source spare parts, which will become a problem as we run out of the spares we procured beforehand, so we need to replace them with newer versions. In total, the full upgrade will cost around 300 million Swiss francs over a period of seven years.

Moreover, a major challenge is developing technology able to withstand the massive amounts of radiation in the experiment. In some ways, that is easier than when we first started; a lot of the technology we use today simply did not exist back in 1992, when people started thinking about the experiments. Other changes mean that we can now do things that would have been unheard of then: for example, using silicon to build our new calorimeter, which is now possible because the cost has fallen and because we know how to produce and handle silicon so that it can withstand the required radiation. We therefore plan to use silicon to replace numerous elements of the experiment, including the tracker and parts of the forward calorimeter.

Significant progress has also been made in developing techniques which allow us to add another coordinate to our measurements of events: time. The time it takes particles to travel through our detector is of the order of nanoseconds, so if you want to use timing to determine when a particle was produced, you need to achieve resolutions of the order of tens of picoseconds. When CMS was first built, that was impossible. Now, however, it is within our reach in terms of the necessary electronics and sensors. We are therefore adding to our detector a device that can measure the arrival time of the various particles with a resolution of 10–30 picoseconds. That will essentially allow us to deal with the major challenge, from the point of view of data quality, at the HiLumi LHC.
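The time scales in this answer can be checked with a few lines of arithmetic. This minimal sketch assumes that particles move at essentially the speed of light and uses a hypothetical 3 m flight path purely for illustration; it shows that flight times come out at the nanosecond scale, while a 30 ps resolution corresponds to only about 9 mm of travel.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum, m/s

def flight_time_ns(path_m: float) -> float:
    """Time for a particle moving at ~c to cover path_m, in nanoseconds."""
    return path_m / C_M_PER_S * 1e9

def light_distance_mm(time_ps: float) -> float:
    """Distance light travels in time_ps picoseconds, in millimetres."""
    return C_M_PER_S * time_ps * 1e-12 * 1e3

print(f"{flight_time_ns(3.0):.1f} ns")      # 10.0 ns for the assumed 3 m path
print(f"{light_distance_mm(30.0):.1f} mm")  # 9.0 mm of travel per 30 ps
```

Since flight times of several nanoseconds must be known and subtracted to recover a production time, the timing device has to be precise to tens of picoseconds for that extra coordinate to be useful.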

The HiLumi machine will achieve the desired level of statistics by making more protons collide at the same time. That, however, creates confusion, as you are usually interested in only a single collision. Until now, we have handled 30–40 concurrent proton collisions and have still been able to achieve a very high level of precision. In the future, that number will increase to 200, distributed over 300 picoseconds. The ability to determine when each particle was produced will allow us to reduce the problem of having so many events and so much data: we will be able to see which tracks are interesting, for example the ones that might indicate supersymmetry, because those particles will all have been produced at the same time. The rest can be discarded, as they represent physics phenomena which we have already measured.
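How much timing helps can be estimated with a deliberately crude model. The sketch below assumes, hypothetically, that the production times of the 200 collisions are spread uniformly over 300 picoseconds. It ignores edge effects and the spatial separation of vertices along the beam (which real reconstruction also uses), and counts how many collisions, including the chosen one itself, fall inside a ±2σ timing window around a chosen collision.

```python
def compatible_collisions(n_collisions: int, spread_ps: float,
                          resolution_ps: float, n_sigma: float = 2.0) -> float:
    """Expected number of collisions whose production time lies inside a
    +/- n_sigma * resolution window around a chosen collision, assuming
    (hypothetically) times spread uniformly over spread_ps, no edge effects."""
    window_ps = 2.0 * n_sigma * resolution_ps
    fraction = min(window_ps / spread_ps, 1.0)  # cannot exceed all collisions
    return n_collisions * fraction

for res in (10.0, 30.0):
    print(res, round(compatible_collisions(200, 300.0, res), 1))
# 10.0 26.7
# 30.0 80.0
```

In this toy model, a 30 ps resolution leaves about 80 of the 200 collisions compatible in time with a given one, and 10 ps about 27. In practice the timing information is combined with the spatial position of each vertex, so the real gain should not be read off this sketch.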

Designing and producing several new electronic chips using state-of-the-art processes, and producing new detector components such as the large amount of silicon detectors (the total needed for our upgrade is ~800 m²), are real challenges ahead of us. They are not easy to overcome, and the pressure comes from the time constraints that we have. Indeed, there are now discussions about whether we can achieve this in time for the officially scheduled upgrade of the machine, which is set to stop in 2025 for two years to prepare for the upgrade. We are now starting to produce the new detectors as we finish the R&D phase.

CERN has the resources to enable R&D on state-of-the-art technologies, and that differs from how it was in the past. Today, the competencies needed to develop electronics with feature sizes of 65 nanometres hardly exist in the academic world. When we were constructing CMS, however, there were several institutes which could develop the electronics we needed. Today, this requires a real investment in terms of engineering, and only a few labs around the world can do it: we can do it at CERN, and it can also be done at Fermilab and at one or two other places. This adds to the pressure we are now experiencing as we work towards the upgrades.

Given these upgrades and the potential of the HiLumi LHC, what are your hopes for the future?

I am convinced that in the future we will reach a level of precision in measuring the Higgs which will tell the theorists which direction to take in order to understand fully how Nature works. For example, the probability of the Higgs decaying into a given pair of particles depends on the mass of those decay products, and when conceiving the experiment we assumed that we would not be able to see whether the Higgs decays into a pair of muons, which are relatively light particles, until quite late into the HiLumi LHC. Yet we have already seen it: we have evidence of it. That, to me, demonstrates the importance of the precision frontier moving forwards.
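The mass dependence mentioned here can be made concrete with the textbook leading-order Standard Model formula for a Higgs boson decaying to a fermion-antifermion pair, where $N_c$ is the number of colours (1 for leptons), $G_F$ is the Fermi constant and $m_f$ is the fermion mass. This is a tree-level sketch that ignores higher-order corrections, and the lepton masses are rounded.

```latex
\Gamma(H \to f\bar{f}) = \frac{N_c\, G_F\, m_H\, m_f^{2}}{4\sqrt{2}\,\pi}
  \left(1 - \frac{4 m_f^{2}}{m_H^{2}}\right)^{3/2}

\frac{\Gamma(H \to \mu^{+}\mu^{-})}{\Gamma(H \to \tau^{+}\tau^{-})}
  \approx \left(\frac{m_\mu}{m_\tau}\right)^{2}
  \approx \left(\frac{0.106~\text{GeV}}{1.777~\text{GeV}}\right)^{2}
  \approx 3.5 \times 10^{-3}
```

For $m_H = 125$ GeV the phase-space factor is essentially 1 for both leptons, so the muon channel is roughly 280 times rarer than the tau channel, which is why observing it was expected to require a very large dataset.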

While I cannot rule out that, with the improvements in event selection discussed earlier, we may be able to fully explore the supersymmetric world, I believe that there is much more potential in the precision frontier. The precision frontier not only helps define the boundaries of our understanding, but also enables us to measure quantum chromodynamics (QCD) and electromagnetism to a very high precision in ways that were not available before. We are improving our global knowledge of Nature, and I believe that the precision frontier and the successes it enables will be our legacy to the textbooks of the future.

Professor Tiziano Camporesi

CMS Former Spokesman

CERN

tiziano.camporesi@cern.ch

https://home.cern/science/experiments/cms

https://twitter.com/CERN

Please note, this article will also appear in the eighth edition of our quarterly publication.